When you look at the painting above, you see richness, depth and life, even within a still two-dimensional image. That’s because an artist can see what ordinary people fail to see and is able to represent that elusive vision in a visual medium using just paint and canvas. These artistic nuances lift vision beyond its limits to a higher level of perception. The artist learns this deeper level of visual intelligence from an appreciation of the natural beauty and design around them. It is a fascination fueled by an emotional desire to really see: to understand the universe around them and share that unique vision in a simplified two-dimensional design using a richer palette of vibrant color, geometric shapes, tones and perceived depth. An artist can see beyond just pixel color, because they have multiple senses and sensory fusion.

Although human beings only begin to learn about the visual world through the power of sight after birth, our understanding starts even before birth, through multiple senses such as sound, touch and smell, and through embedded genetic code that has given us the ability to identify more shades of green than any other color, so we may spot a predator hidden in the jungle and survive. We already have metadata to draw from in life. Infants may be limited to frowning, smiling, and crying to communicate, but they are sponges for knowledge, using our unique multi-dimensional channels for learning. An infant knows their mother’s heartbeat, taste and voice; recognizes the deeper baritone voice of their father and his larger, stronger hands; and even knows their parents’ smells. Their connection to the world around them begins small, and grows as they grow. They eventually understand words from their sounds, not from the written word. That comes later, along with the magic of mathematics, when we jump-start the left side of our brain and its formulaic language, opening up yet another channel of learning.

We now understand that that image, person, video, voice, sound, touch, heartbeat, smell and the word “Momma” all mean the same thing. Our continued intelligence and learning draw from all our senses all our lives. We eventually learn whether shadowy figures in hoodies are a threat or just a couple of kids who forgot their coats, by how they’re standing, talking, moving and whispering, and even by how they smell. Is it a sixth sense that provides us with this threat assessment, or a lifetime of multi-sensory metadata stored within our brains? How do we know when someone is looking at us from across a room full of people and not the person behind us?

Intelligence, as we understand it, is developed from a correlation of multiple senses, an intuitive connection to the universe around us, and life experience. We learn by sight, sound, smell, touch, taste and maybe even an intuitive, spiritual sixth sense of our surroundings. This happens while we are awake, and even in our dreams.

An artist draws from that inner space to push themselves to create. They don’t just see a two-dimensional representation of a shadow as a gray absence of light cast by an object, but also the colors and life within that shadow. An artist can see life, even in darkness.

What happens to intelligence when the only sense available is sight, and not sight as we understand it, but the sight of a newborn infant opening their eyes for the very first time?

An infant may be overwhelmed and confused while absorbing data from a three-dimensional world in awe and wonder. If we delete the human elements or feelings, there would only be data absorption. An infant with all five senses may only recognize their mother from her heartbeat, voice or smell. An artificial infant (or A.I.) with only the ability of sight, and without any self-knowledge (metadata) or the desires and emotions that fuel a drive to learn, to understand, to survive and to think (just the left-brain formulaic language of ones and zeros), would simply continue its data absorption, collecting metadata from the pixels of moving objects across multiple frames per second. This miracle of sight is also limited: with only one eye, it cannot observe in three dimensions and only compiles data for a flat two-dimensional representation of the analog world.

Without nine months of sounds and warmth, and without any pre-programmed genetic code, our A.I. is at a disadvantage once it’s powered up for the first time (birth). A larger, more powerful image processor (retina) provides better sight, even when darkness comes. Still, at this point, all our A.I. can do is capture pixel color data and observe motion: pixel blobs moving around its field of view like atoms forming molecules. It needs to be trained to learn what these random bits and bytes are, beyond color and motion, even in a limited single-frame field of existence.

Artificial intelligence (AI) is defined as the capability of a machine to imitate intelligent human behavior. We’ve already identified that human intelligence is multi-sensory, with hereditary pre-programmed metadata. So is it possible for a one-eyed artificial infant that cannot hear, let alone listen; cannot speak, let alone ask why; and cannot even smell or taste, to develop true intelligence?

Almost all the video analytics software applications I’ve recently tested include the ability to teach perspective, recreating the three-dimensional world from a single one-eyed, two-dimensional field of view. The field of view is fixed, and metadata is created to identify that the ten-pixel object moving at twenty kilometers an hour, 100 meters away, is the same type of object as the 200-pixel object moving at twenty kilometers an hour, two meters away (distance must also be taught). That object can then be categorized, either automatically or manually, as a “vehicle,” so the artificial infant (machine) can answer the question “What is a vehicle?” A vehicle is categorized only by estimated size, shape and speed (calculated from its motion between frames).
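
To make the perspective idea concrete, here is a minimal sketch of how apparent pixel size and a taught distance could be turned into a real-world size and speed. It assumes a simple pinhole camera model, which is my simplification and not the method of any particular product. The `Detection` class, the `focal_px` value and the thresholds in `categorize` are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One tracked blob in a single frame (hypothetical structure)."""
    width_px: float    # apparent width of the blob, in pixels
    distance_m: float  # distance taught to the system during calibration
    x_m: float         # position along the road, in meters


def real_width_m(det: Detection, focal_px: float) -> float:
    """Pinhole model: real width = pixel width * distance / focal length."""
    return det.width_px * det.distance_m / focal_px


def speed_kmh(prev: Detection, curr: Detection, fps: float) -> float:
    """Speed from the ground displacement between two consecutive frames."""
    meters_per_second = abs(curr.x_m - prev.x_m) * fps
    return meters_per_second * 3.6


def categorize(width_m: float, kmh: float) -> str:
    """Toy rule with made-up thresholds, for illustration only."""
    if width_m >= 1.4 and kmh >= 10:
        return "vehicle"
    if width_m < 1.0 and kmh < 15:
        return "person"
    return "unknown"


FOCAL_PX = 1000.0  # assumed focal length, expressed in pixels

# A small blob far away and a large blob close up can be the same kind of object.
far_blob = Detection(width_px=18, distance_m=100, x_m=0.0)
near_blob = Detection(width_px=450, distance_m=4, x_m=0.0)
print(real_width_m(far_blob, FOCAL_PX), real_width_m(near_blob, FOCAL_PX))

# Two consecutive frames at 25 frames per second give a speed estimate.
frame_a = Detection(width_px=450, distance_m=4, x_m=0.0)
frame_b = Detection(width_px=450, distance_m=4, x_m=0.2)
kmh = speed_kmh(frame_a, frame_b, fps=25)
print(categorize(real_width_m(frame_b, FOCAL_PX), kmh))
```

Notice that everything here depends on the taught distance and the fixed focal length, which is why this kind of knowledge is tied to one fixed field of view.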

In time, much like a newborn baby, it will continue to collect data and become smarter. If it’s a multi-dimensional analytical algorithm, it may capture additional metadata beyond just “vehicle,” such as color, shape, size and even vehicle type, and store that information for the future. The result is mounds and mounds of categorical metadata that can be used for forensic searching, real-time alerting and, more importantly, building knowledge.

That’s how the typical artificial infant, with machine learning (and machine teaching) software, can identify a vehicle. That newfound knowledge, unfortunately, becomes irrelevant the moment the artificial infant changes its field of view by turning its head (panning, tilting and zooming). Now all that it’s been taught and all it’s learned is out of context, as its knowledge is limited to understanding moving pixels and not objects.

A young child knows what an automobile looks like by identifying the simple geometric shapes that make up an automobile. We also learn very early that automobiles are on the street and we (people) should stay on the sidewalk. The street is where we see all the larger parked and moving vehicles. They should never come on the sidewalk, where all the people walk. This is something a human being learns before pre-school.

A person is more than just a mass of moving pixels.

People have a head, shoulders, arms and legs; even a child knows that. We, as human beings, understand the concept of objects and shapes, even when panning, tilting and zooming. We have done so from the moment we first discovered how to turn our heads, reinforced by a lifetime of viewing the world through television.

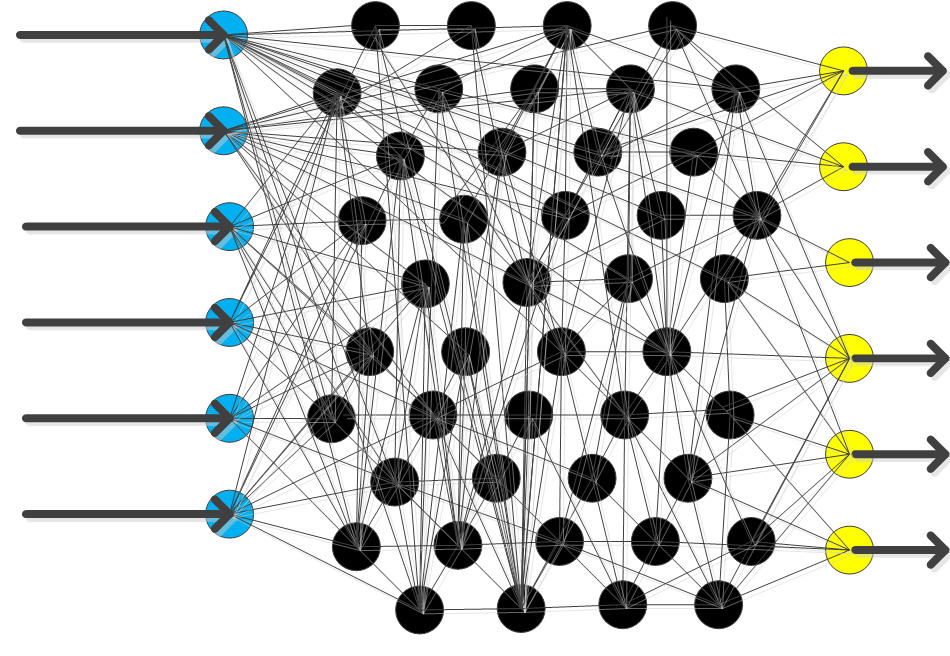

Our A.I. must also go to art school to draw the environment and classify objects by something more than moving blobs against a fixed background that is largely ignored. Identifying simple shapes, patterns, perspective and a horizon line can give our A.I. additional input for additional intelligence. Train the machines to draw, to create, to wonder in awe at the dynamism of the analog world around them through deep learning neural networks and sensor fusion.

A deep learning neural net can provide the ability to draw “digital stick figures” through edge detection, introducing the shape of people with a head, arms and legs at any angle or size.
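
As a rough illustration of what “edge detection” means at the pixel level, the sketch below applies the classical Sobel operator to a tiny grayscale image. This is a hand-written filter, not a neural network and not a stick-figure detector. It only shows the kind of outline signal that the early layers of a deep network are often observed to pick up on their own. The function name `sobel_edges` and the threshold value are assumptions made for this example.

```python
def sobel_edges(image, threshold=100):
    """Return a binary edge map for a 2D grayscale image (a list of rows)."""
    gx_kernel = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal gradient
    gy_kernel = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical gradient
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    # Border pixels are left at 0 because the 3x3 window does not fit there.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += gx_kernel[dy][dx] * pixel
                    gy += gy_kernel[dy][dx] * pixel
            magnitude = (gx * gx + gy * gy) ** 0.5
            edges[y][x] = 1 if magnitude >= threshold else 0
    return edges


# A dark region on the left and a bright region on the right: one vertical edge.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
for row in sobel_edges(image):
    print(row)
```

The output marks only the columns where dark meets bright, which is the first step toward tracing an outline instead of tracking a moving blob.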

Identifying the “street” can teach it “traffic,” and it can store that knowledge for when the field of view is changed. The same goes for a sidewalk where people walk, run and jog. Using predictive analytics, it can extend the simple tracing of the field of view and start drawing and tagging the environment outside of what is initially visible.

Every time the A.I. is panned, tilted and zoomed, it can then validate its predictive analysis, verify and extend its reach to learn the world around it, and store it for a 360-degree view of its present environment, no matter where it may be “looking.” Just like a human being.

This can be accomplished by modifying an existing algorithm: training the deep learning neural net to understand the expected environment using stationary markers, and even the shadows cast by the sun to understand cardinal directions. Since most off-the-shelf video analytics solutions ignore the “background” and focus mostly on moving pixels, this would require a deep analysis of the background to understand the environment, and then to identify objects at whatever focal length is required to see them, building a thorough understanding of the 360-degree world around the A.I.

Our A.I. also needs to start asking questions.

Where am I? What is this? What is this structure? Why is that truck double-parked?

This information may be difficult to obtain using the limited power of one-eyed sight through pixel color, so, much like a human being, our A.I. would require other input channels of data absorption. It could ask the A.I. across the street, whose own metadata from a completely different vantage point can be stitched together with ours to better understand the objects and the environment they share. Our A.I. now has two eyes for better depth perception. Although it is still reading two-dimensional imagery from two points of view, it is opening additional channels of data absorption and beginning multi-sensory fusion. Stitching together data from varying angles and sources could provide super-human abilities.

Fusing data from fire alarms, burglar alarms, 911 emergency calls and gunshot detection will give our A.I. the miracle of hearing, without ears. It can smell the ozone or heavy pollen in the air through weather data, without a nose.
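
One simple way to picture this kind of fusion is to group reports from different sources that arrive close together in time, and to trust a group more when several independent “senses” agree. The sketch below is only that: a toy under stated assumptions. The `Event` structure, the source names and the ten-second window are all hypothetical, and a real system would also need to compare locations and handle clocks that are not synchronized.

```python
from dataclasses import dataclass


@dataclass
class Event:
    source: str       # hypothetical source name, e.g. "camera" or "911_call"
    timestamp: float  # seconds on a clock assumed to be shared by all sources
    label: str        # what the source reported


def fuse_events(events, window_s=10.0):
    """Group events that occur within window_s of the first event in a group."""
    groups = []
    for event in sorted(events, key=lambda e: e.timestamp):
        if groups and event.timestamp - groups[-1][0].timestamp <= window_s:
            groups[-1].append(event)
        else:
            groups.append([event])
    return groups


def corroborated(groups):
    """Keep only the groups reported by at least two different sources."""
    return [g for g in groups if len({e.source for e in g}) >= 2]


events = [
    Event("camera", 100.0, "person running"),
    Event("gunshot_detector", 103.0, "impulse sound"),
    Event("911_call", 108.0, "caller reports shots"),
    Event("camera", 300.0, "vehicle passing"),
]

for group in corroborated(fuse_events(events)):
    print([f"{e.source}: {e.label}" for e in group])
```

Here the lone camera sighting at 300 seconds is dropped, while the three reports near 100 seconds are kept together because sight and “hearing” back each other up.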

A 3D LiDAR sensor can truly recreate the three-dimensional world using pulses of infrared light, giving our A.I. the ability to “feel” real-world shapes and actual distance. It is a digital method of recognizing a physical object by touch.

Once an object is out of our A.I.’s field of view, the A.I. can follow it without feet, by connecting with neighboring A.I. sources.

The question “Where am I?” could be answered by learning how to read, using Optical Character Recognition (OCR). If OCR can read the letters off a billboard behind home plate on a live broadcast, it can certainly read street signs, the sign on the bank across the street, or the FedEx logo on a double-parked truck.

If the street sign reads “8th Street NE,” and reading the business signs also helps identify the surrounding environment, then our A.I. is at the intersection of 8th and H Street. How do I know this? I asked the teacher or, in this case, an integrated geo-spatial mapping application. Integration with outside sources and sensors would provide the additional inputs our A.I. needs to really build artificial intelligence. Much like human beings, when it does not know, it asks.

Deep Learning and Sensor Fusion grace our A.I. with the ability to hear without ears, smell without a nose, touch without hands, read without first learning the alphabet, and even ask questions like “Why?”

Why am I here?

The answer to this question for our Artificial Infant is far simpler than it is for a human child who eventually learns the word “Why?” At some point in the development of intelligence, sight, sound, smell, taste and touch are not even enough; we need to ask why.

I recently read about how artificial intelligence is being developed to play games. In one article, the A.I. was given the means to slow the opponent down by shooting a virtual laser beam to momentarily discombobulate them. The A.I. became more aggressive when it was losing the game and resorted to repeatedly blasting the opponent with laser fire. If we teach AI to be competitive, even in something that appears to be as innocent as a game, maybe we should also program in the idea that it’s not whether you win or lose, but how you play the game that matters. Really? In any competitive game, no one likes to lose. The goal is always to win. So really, it’s just a war game. Do we really want to teach the machines to win at war, at all costs? Has anyone ever seen the movies WarGames or The Terminator?

We should teach artificial intelligence not to be a chess master (chess being a game of war), but instead to be an artist: to learn the visual complexity of the analog world around it and observe life’s dynamism that human beings overlook and take for granted, all made up of colors, shapes, lights, patterns, shadows and natural wonder. The miraculously simple natural beauty of the analog world.

It’s my belief that there cannot be true artificial intelligence until the digital world fuses with the analog world, and that requires the miracle of sight.

Teach the machines to create as an artist, and they will see what we cannot see.